2012 年, AlexNet 横空出世。这个模型的名字来源于论⽂第一作者的姓名 Alex Krizhevsky。AlexNet 使⽤了 8 层卷积神经⽹络,并以很⼤的优势赢得了 ImageNet 2012 图像识别挑战赛冠军。

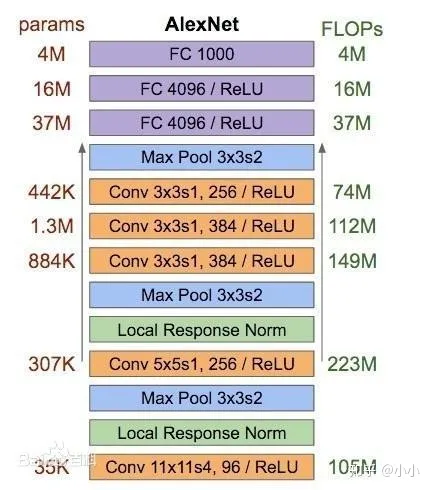

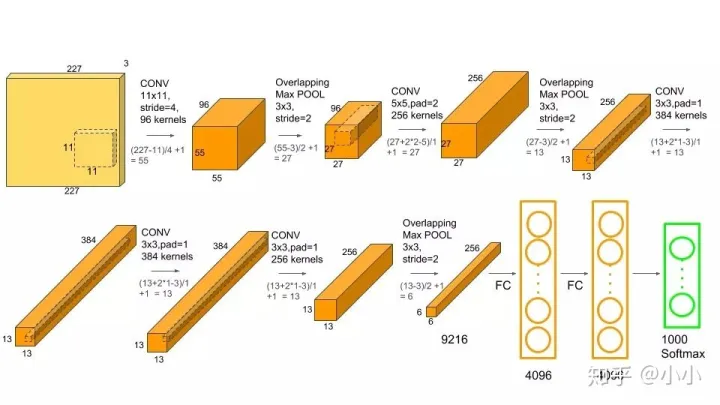

AlexNet网络结构

Alexnet模型由5个卷积层和3个池化Pooling 层 ,其中还有3个全连接层构成。AlexNet 跟 LeNet 结构类似,但使⽤了更多的卷积层和更⼤的参数空间来拟合⼤规模数据集 ImageNet。它是浅层神经⽹络和深度神经⽹络的分界线。

AlexNet代码实现

import torch

import torch.nn as nn

import torch.nn.functional as F

class AlexNet(nn.Module):

def __init__(self, p):

super(AlexNet, self).__init__()

# AlexNet模型由五个卷积层,三个池化层,三个全连接层构成

self.conv1 = nn.Conv2d(1, 96, kernel_size = (5, 5), stride = 1)

self.pool1 = nn.MaxPool2d(kernel_size=(2, 2), stride = 2) # 使用池化层减小输入的高和宽

self.conv2 = nn.Conv2d(96, 256, kernel_size = (5, 5), stride = 1, padding = 2)

self.pool2 = nn.MaxPool2d(kernel_size = (2, 2), stride = 2)

self.conv3 = nn.Conv2d(256, 384,kernel_size = (3, 3), stride = 1, padding = 1)

self.conv4 = nn.Conv2d(384, 384, kernel_size = (3, 3), stride = 1, padding = 1)

self.conv5 = nn.Conv2d(384, 256, kernel_size = (3, 3), stride = 1, padding = 1)

# 连续三个卷积层,使用更小的卷积窗口,除最后一个卷积层外,都进一步增大了输出通道数

self.pool3 = nn.MaxPool2d(kernel_size = (2, 2), stride = 2)

# 全连接层

self.dropout = nn.Dropout(p = p)

self.fc1 = nn.Linear(256*3*3, 1024)

self.fc2 = nn.Linear(1024, 1024)

self.fc3 = nn.Linear(1024, 10)

def forward(self, x):

x = self.conv1(x)

x = self.pool1(F.relu(x))

x = self.conv2(x)

x = self.pool2(F.relu(x))

x = self.conv3(x)

x = F.relu(x)

x = self.conv4(x)

x = F.relu(x)

x = self.conv5(x)

x = self.pool3(F.relu(x))

x = torch.flatten(x, 1)

x = self.fc1(F.relu(x))

x = self.dropout(x)

x = self.fc2(F.relu(x))

x = self.dropout(x)

x = self.fc3(F.relu(x))

return x